gRPC load balancing on Kubernetes (using Headless Service)

gRPC is one of the most popular modern RPC frameworks for inter-process communication, and it is a great choice for a microservice architecture. Kubernetes, in turn, is arguably the most popular way to deploy a microservice application.

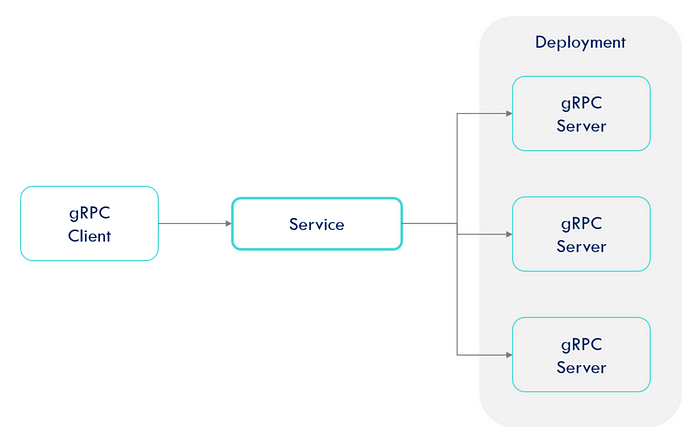

A Kubernetes deployment can have many identical back-end instances serving many client requests. A Kubernetes ClusterIP service provides a load-balanced IP address. But this default load balancing doesn’t work out of the box with gRPC.

If you use gRPC with many backends deployed on Kubernetes, this document is for you.

Why Load balancing?

A large-scale deployment has many identical back-end instances and many clients. Each backend server has a certain capacity. Load balancing is used for distributing the load from clients across available servers.

Before getting into the details of gRPC load balancing in Kubernetes, let’s look at the benefits of load balancing.

Load balancing has many benefits, including:

- Tolerance of failures: if one of your replicas fails, then other servers can serve the request.

- Increased scalability: you can distribute user traffic across many servers, so capacity grows as you add servers.

- Improved throughput: you can improve the throughput of the application by distributing traffic across various backend servers.

- No-downtime deployment: you can deploy without downtime using rolling deployment techniques.

There are many other benefits of load balancing.

Load Balancing options in gRPC

There are two types of load balancing options available in gRPC: proxy and client-side.

Proxy load balancing

In proxy load balancing, the client issues RPCs to a load balancer (LB) proxy. The LB distributes each RPC call to one of the available backend servers that implement the actual logic for serving the call. The LB keeps track of the load on each backend and implements algorithms for distributing load fairly. The clients themselves do not know about the backend servers, and clients can be untrusted. This architecture is typically used for user-facing services, where clients from the open internet can connect to the servers.
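As a rough illustration of "keeps track of the load on each backend", here is a minimal sketch of one possible policy: send each new RPC to the backend with the fewest in-flight calls. The class `LeastLoadedProxy`, its methods, and the server names are hypothetical and exist only for this example. Real proxies use more elaborate policies.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Toy model of a proxy load balancer (hypothetical class, not a gRPC API).
final class LeastLoadedProxy {
    // Backend address -> number of RPCs currently in flight.
    private final Map<String, Integer> inFlight = new LinkedHashMap<>();

    LeastLoadedProxy(String... backends) {
        if (backends.length == 0) {
            throw new IllegalArgumentException("at least one backend is required");
        }
        for (String backend : backends) {
            inFlight.put(backend, 0);
        }
    }

    // Choose the backend with the fewest in-flight RPCs and count the new RPC
    // against it. Ties go to the backend that was listed first.
    synchronized String acquire() {
        String best = null;
        int bestLoad = Integer.MAX_VALUE;
        for (Map.Entry<String, Integer> entry : inFlight.entrySet()) {
            if (entry.getValue() < bestLoad) {
                best = entry.getKey();
                bestLoad = entry.getValue();
            }
        }
        inFlight.put(best, bestLoad + 1);
        return best;
    }

    // Call when an RPC finishes so the backend's load drops again.
    synchronized void release(String backend) {
        inFlight.computeIfPresent(backend, (name, load) -> Math.max(0, load - 1));
    }

    public static void main(String[] args) {
        LeastLoadedProxy proxy = new LeastLoadedProxy("server-1", "server-2", "server-3");
        System.out.println(proxy.acquire()); // server-1
        System.out.println(proxy.acquire()); // server-2
        System.out.println(proxy.acquire()); // server-3
        proxy.release("server-2");           // server-2 finishes its call
        System.out.println(proxy.acquire()); // server-2 (now the least loaded)
    }
}
```

The client only ever talks to the proxy, so none of this bookkeeping is visible to it.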

Client-side load balancing

In client-side load balancing, the client is aware of many backend servers and chooses one to use for each RPC. If it wishes, the client can implement load balancing algorithms based on load reports from the servers. For a simple deployment, clients can round-robin requests between the available servers.
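The round-robin idea can be sketched in a few lines. This is not how grpc-java implements its round_robin policy; `RoundRobinPicker` is a hypothetical class that only shows the rotation logic, and the addresses are placeholders.

```java
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical client-side picker: each call to pick() returns the next backend in turn.
final class RoundRobinPicker {
    private final List<String> backends;
    private final AtomicInteger next = new AtomicInteger();

    RoundRobinPicker(List<String> backends) {
        if (backends.isEmpty()) {
            throw new IllegalArgumentException("at least one backend is required");
        }
        this.backends = List.copyOf(backends);
    }

    // Thread-safe: floorMod keeps the index valid even after the counter overflows.
    String pick() {
        int index = Math.floorMod(next.getAndIncrement(), backends.size());
        return backends.get(index);
    }

    public static void main(String[] args) {
        RoundRobinPicker picker = new RoundRobinPicker(
                List.of("10.244.0.11:8001", "10.244.0.12:8001", "10.244.0.13:8001"));
        for (int rpc = 0; rpc < 4; rpc++) {
            // The fourth pick wraps around to the first address.
            System.out.println("RPC " + rpc + " -> " + picker.pick());
        }
    }
}
```

The picker ignores server load entirely, which is the trade-off of this simple approach.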

For more information about the gRPC load balancing options, you can check the article gRPC Load Balancing.

Challenges associated with gRPC load balancing

gRPC runs over HTTP/2. An HTTP/2 TCP connection is long-lived, and a single connection can multiplex many requests. This reduces the overhead associated with connection management. But it also means that connection-level load balancing is not very useful: once the connection is established, every request sent over it reaches the same backend. The default load balancing in Kubernetes works at the connection level. For that reason, Kubernetes default load balancing does not work with gRPC.
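The difference can be illustrated with a toy simulation. It involves no networking; `BalancingSimulation` and the pod names are hypothetical. It only counts where requests would land if the backend is chosen once per connection versus once per request.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Toy comparison of connection-level and request-level balancing (no real networking).
final class BalancingSimulation {
    private static Map<String, Integer> zeroCounts(List<String> backends) {
        Map<String, Integer> counts = new LinkedHashMap<>();
        for (String backend : backends) {
            counts.put(backend, 0);
        }
        return counts;
    }

    // The backend is chosen once, when a connection opens. Every request
    // multiplexed on that connection then lands on the same backend.
    static Map<String, Integer> connectionLevel(
            List<String> backends, int connections, int requestsPerConnection) {
        Map<String, Integer> counts = zeroCounts(backends);
        for (int c = 0; c < connections; c++) {
            String backend = backends.get(c % backends.size());
            counts.merge(backend, requestsPerConnection, Integer::sum);
        }
        return counts;
    }

    // The backend is chosen again for every request.
    static Map<String, Integer> requestLevel(List<String> backends, int requests) {
        Map<String, Integer> counts = zeroCounts(backends);
        for (int r = 0; r < requests; r++) {
            counts.merge(backends.get(r % backends.size()), 1, Integer::sum);
        }
        return counts;
    }

    public static void main(String[] args) {
        List<String> pods = List.of("pod-1", "pod-2", "pod-3");
        // One long-lived connection carrying 9 requests: a single pod does all the work.
        System.out.println(connectionLevel(pods, 1, 9)); // {pod-1=9, pod-2=0, pod-3=0}
        // The same 9 requests balanced individually.
        System.out.println(requestLevel(pods, 9));       // {pod-1=3, pod-2=3, pod-3=3}
    }
}
```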

To confirm this hypothesis, let’s create a Kubernetes application. This application consists of:

- Server pod: Kubernetes deployment with three gRPC server pods.

- Client pod: Kubernetes deployment with one gRPC client pod.

- Service: A ClusterIP service, which selects all server pods.

Creating Server Deployment

To create a deployment, save the below code in a YAML file, say deployment-server.yaml, and run the command kubectl apply -f deployment-server.yaml.

This creates a gRPC server with three replicas. The gRPC server is running on port 8001.

To check that the pods were created successfully, run the command kubectl get pods.

You can run the command kubectl logs --follow grpc-server-<> to see the logs.

Creating Service

To create a service, save the below code in a YAML file, say service.yaml, and run the command kubectl apply -f service.yaml.

The ClusterIP service provides a load-balanced IP address. It load-balances traffic across the pod endpoints matched by its label selector.

As seen above, the IP addresses of the pods are 10.244.0.11:8001, 10.244.0.12:8001, and 10.244.0.13:8001. If a client calls the service on port 80, the service load-balances the call across these endpoints (the IP addresses of the pods). But this is not true for gRPC, as you’ll see shortly.

Creating Client Deployment

To create a client deployment, save the below code in a YAML file, say deployment-client.yaml, and run the command kubectl apply -f deployment-client.yaml.

At startup, the gRPC client application makes 1,000,000 calls to the server from 10 concurrent threads using one channel. The SERVER_HOST environment variable points to the DNS name of the service grpc-server-service. On the gRPC client, the channel is created by passing SERVER_HOST (serverHost) as:

    ManagedChannelBuilder.forTarget(serverHost)
        .defaultLoadBalancingPolicy("round_robin")
        .usePlaintext()
        .build();

If you check the server logs, you’ll notice that all client calls are served by one server pod only.

Client-side load balancing using headless service

You can do client-side round-robin load balancing using a Kubernetes headless service. This simple load balancing works out of the box with gRPC. The downside is that it does not take into account the load on the server.

What is Headless Service?

Luckily, Kubernetes allows clients to discover pod IPs through DNS lookups. Usually, when you perform a DNS lookup for a service, the DNS server returns a single IP — the service’s cluster IP. But if you tell Kubernetes you don’t need a cluster IP for your service (you do this by setting the clusterIP field to None in the service specification ), the DNS server will return the pod IPs instead of the single service IP. Instead of returning a single DNS A record, the DNS server will return multiple A records for the service, each pointing to the IP of an individual pod backing the service at that moment. Clients can therefore do a simple DNS A record lookup and get the IPs of all the pods that are part of the service. The client can then use that information to connect to one, many, or all of them.

Setting the clusterIP field in a service spec to None makes the service headless, as Kubernetes won’t assign it a cluster IP through which clients could connect to the pods backing it.

Kubernetes in Action — Marko Lukša
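From a Java client, the multiple A records described above can be observed with the standard `InetAddress.getAllByName` call. The sketch below is only an illustration. The class name `ResolveAll` is hypothetical, and the service name would be passed as an argument when running inside the cluster.

```java
import java.io.UncheckedIOException;
import java.net.InetAddress;
import java.net.UnknownHostException;
import java.util.ArrayList;
import java.util.List;

// Prints every IP address that a DNS name resolves to.
final class ResolveAll {
    static List<String> resolveAll(String host) {
        try {
            List<String> addresses = new ArrayList<>();
            for (InetAddress address : InetAddress.getAllByName(host)) {
                addresses.add(address.getHostAddress());
            }
            return addresses;
        } catch (UnknownHostException e) {
            // Wrapped so callers do not have to declare a checked exception.
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        // Inside the cluster you would pass the service name, for example
        // grpc-server-service; "localhost" is only a fallback for local runs.
        String host = args.length > 0 ? args[0] : "localhost";
        for (String address : resolveAll(host)) {
            System.out.println(host + " -> " + address);
        }
    }
}
```

For a headless service you would expect one line per backing pod. For a regular ClusterIP service you would expect a single line with the cluster IP.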

Define the headless service as:

To make a service headless, the only change you need is to set the .spec.clusterIP field to None.

Verifying DNS

To confirm the DNS of the headless service, create a pod with the image tutum/dnsutils:

    kubectl run dnsutils --image=tutum/dnsutils --command -- sleep infinity

and then run the command:

    kubectl exec dnsutils -- nslookup grpc-server-service

This returns the FQDN of the headless service as:

As you can see, the headless service resolves to the IP addresses of all pods connected through the service. Contrast this with the output returned for a non-headless service:

    Server: 10.96.0.10
    Address: 10.96.0.10#53

    Name: grpc-server-service.default.svc.cluster.local
    Address: 10.96.158.232

Configuring client

The only change left is to point the client application to the headless service, using the port of the server pods, as:

Notice that SERVER_HOST now points to the headless service grpc-server-service and the server port 8001.
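A headless service has no cluster IP through which a service port could be mapped, so the value has to carry the pod port explicitly. A client can fail fast when the port is missing. The sketch below is one hypothetical way to check this at startup. The class `ServerTarget` and the fallback value are assumptions made for the example and are not part of the article's client.

```java
// Hypothetical startup check for a host:port value such as SERVER_HOST.
final class ServerTarget {
    final String host;
    final int port;

    private ServerTarget(String host, int port) {
        this.host = host;
        this.port = port;
    }

    // Splits "host:port" at the last colon and rejects values without a port.
    static ServerTarget parse(String value) {
        int colon = value.lastIndexOf(':');
        if (colon <= 0 || colon == value.length() - 1) {
            throw new IllegalArgumentException("expected host:port but got: " + value);
        }
        // A non-numeric port raises NumberFormatException,
        // which is itself an IllegalArgumentException.
        int port = Integer.parseInt(value.substring(colon + 1));
        return new ServerTarget(value.substring(0, colon), port);
    }

    public static void main(String[] args) {
        String value = System.getenv("SERVER_HOST");
        if (value == null) {
            value = "grpc-server-service:8001"; // assumed fallback for local runs
        }
        ServerTarget target = parse(value);
        System.out.println("host=" + target.host + " port=" + target.port);
    }
}
```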

You can also set SERVER_HOST to the FQDN:

    - name: SERVER_HOST
      value: "grpc-server-service.default.svc.cluster.local:8001"

To deploy the client again, first delete the client deployment:

    kubectl delete deployment.apps/grpc-client

and then deploy the client again:

    kubectl apply -f deployment-client.yaml

You can now see logs printed by all server pods.

Code example

The working code example for this article is available on GitHub. You can run the code on a local Kubernetes cluster using kind.

Summary

There are two kinds of load balancing options available in gRPC: proxy and client-side. As gRPC connections are long-lived, the default connection-level load balancing of Kubernetes does not work with gRPC. A Kubernetes headless service is one mechanism through which load balancing can be achieved, because its DNS name resolves to the IPs of the backing pods.

Originally published at https://techdozo.dev.